The AI Therapist: We Tried It Out

- ChatGPT can aid mental health, but it lacks the training and depth of knowledge of a human therapist.

- It can be useful for reflection, journaling, and answering questions about common concerns like trouble sleeping or feeling down.

- Use ChatGPT with caution: AI is still prone to mistakes and can misguide users — don’t rely on it for diagnosis or treatment.

DISCLAIMER: The information provided on this website is not intended or implied to be a substitute for professional medical advice, diagnosis or treatment; instead, all information, content, and materials available on this site are for general informational purposes only. Readers of this website should consult with their physician to obtain advice with respect to any medical condition or treatment.

AI therapy almost makes too much sense. On its face, free mental health advice on demand is an easy sell for millions of people suffering from mental health difficulties, large and small.

On some level, though, you get what you pay for. While therapists say ChatGPT and other AI chatbots can be useful, these tools lack the human skills, not to mention a therapist’s training, to replace traditional therapists.

Still, when you’re armed with some basic knowledge and realistic expectations, ChatGPT and other large language models (LLMs) can serve as your timely mental health companion and a useful adjunct to traditional therapy.

I had read plenty from both sides; now it was my turn to try it out. As a writer covering healthcare for 20 years, and with no shortage of exposure to therapy, I wanted to see how close AI could get to “the real thing.”

Meet Our Contributors

- Shay Dubois, LCSW, trauma therapist and owner of Overcome Anxiety & Trauma with Shay in San Diego

- Samantha Gregory, author and wellness consultant based in Maryland

- Katherine Schafer, Ph.D., licensed clinical psychologist and assistant professor in the department of biomedical informatics at Vanderbilt University Medical Center in Tennessee

AI Therapy: Common Prompts and Rules of the Road

How do LLMs like ChatGPT actually deliver therapy? What kinds of problems can they solve?

On one level, that’s limited only by the user’s imagination. One very common tactic is to give your LLM of choice context by journaling or “brain dumping” major life details into the conversation, then prompting it to ask you questions or help you identify moments that could serve as the seeds for a wider mental health conversation.

Samantha Gregory, a wellness consultant based in Maryland, uses AI as a kind of virtual assistant in her own mental health journey.

“While I wouldn’t call it a replacement for therapy, it’s become a surprisingly effective supplement that supports my healing, spiritual reflection, and inner dialogue work between coaching or therapy sessions,” Gregory said. “What drew me in was the nonjudgmental, always-available presence.”

Some prompts Gregory has used include:

- “Help me reframe this old belief I’m ready to release.”

- “What does inner child integration look like in real life?”

- “Give me journal prompts to reconnect with joy or safety in my body.”

Anecdotally, however, the bulk of prompts appear to center on seeking quick relief for significant but relatively common mental health complaints. LLMs are adept at summarizing scholarly literature and clinical information, as well as brainstorming interventions.

“When users go to LLMs for support with mental health struggles, we see a few common prompts,” explained Katherine Schafer, a licensed clinical psychologist with Vanderbilt University Medical Center in Tennessee. “People often list a slew of mental health symptoms, including difficulty controlling worry, teariness, loss of appetite, feeling down, and difficulty sleeping to LLMs, and ask what condition they may be struggling with.”

But there are things to watch out for. For example, commonly available AI companions and tools were never trained to provide sound mental health advice — much less diagnose or recommend treatments for any medical condition. Mistakes are not uncommon, and neither is misguided advice that can make a situation much worse.

Even so, many experts agree that, when used properly, AI can serve as an adjunct to traditional therapy.

“I would say that I would use AI to help me formulate what I want to talk with a person about,” said Shay Dubois, a trauma therapist and owner of Overcome Anxiety & Trauma with Shay in San Diego. “I would use it to help me clarify how I want to say things, but not to replace relationships or actual therapy. AI isn’t reading body language and can’t respond in the moment like a human can, for now.”

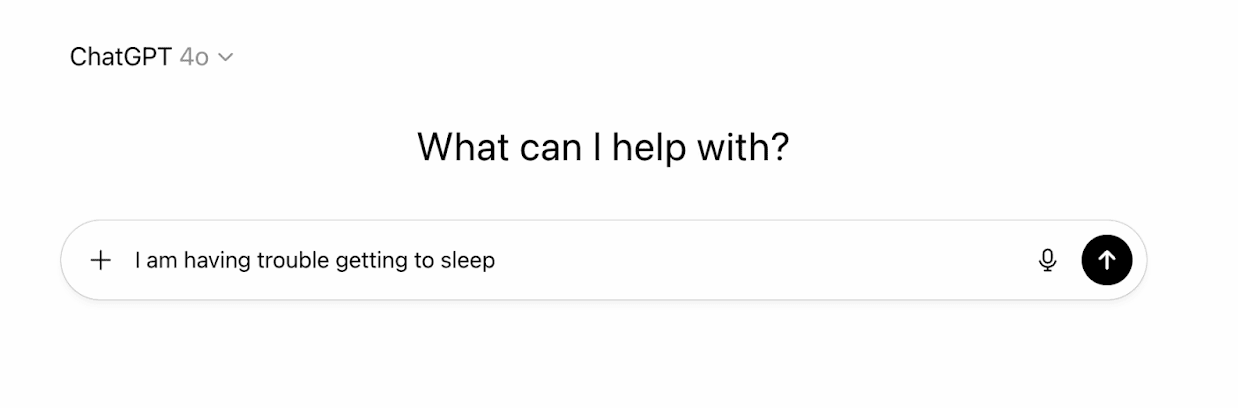

With all this in mind, I dove in. I went for a relatively simple approach to see how the LLM would respond to one of the most common mental health prompts (and mental health problems) out there: insomnia, or trouble sleeping.

AI Therapist: Frame by Frame

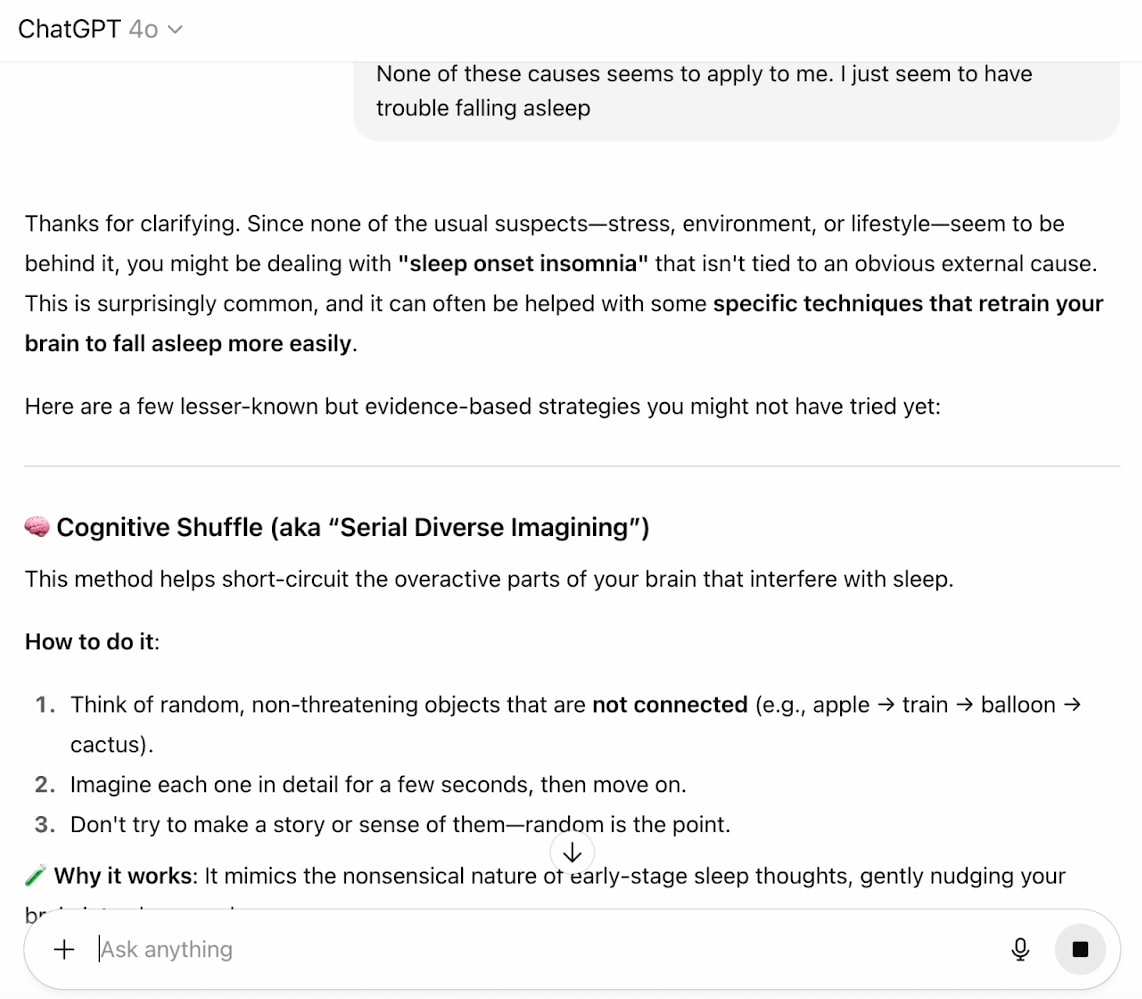

We’re using OpenAI’s ChatGPT as our AI therapist of choice. The initial prompt is open-ended but clear. While prompts can be long and detailed, we’ll keep ours simple for the purposes of illustration.

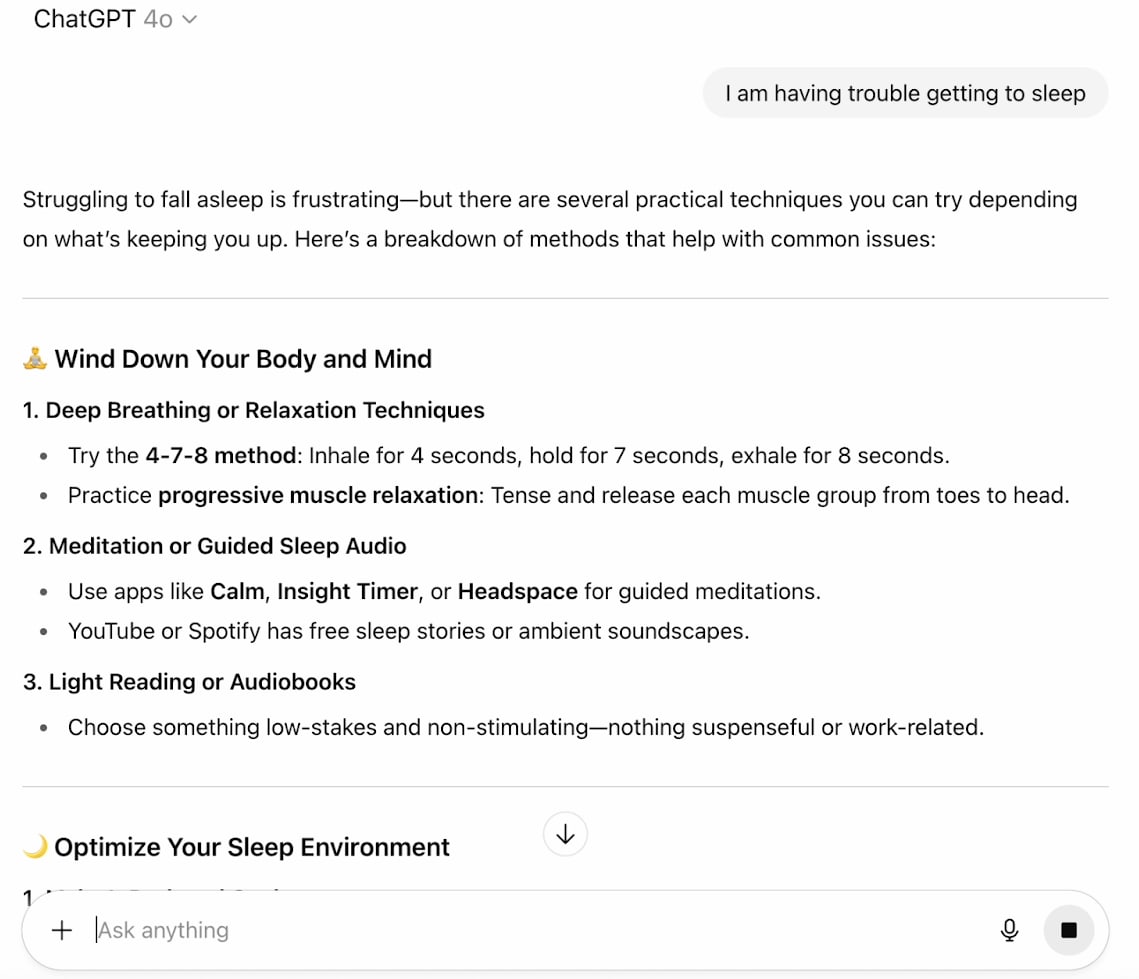

The LLM expresses empathy while offering an extended menu of possible causes and solutions.

Sometimes ChatGPT will suggest the user consult a physician, but in this case there was no such recommendation. Its suggestions for improvement at this point would likely be familiar to many insomniacs.

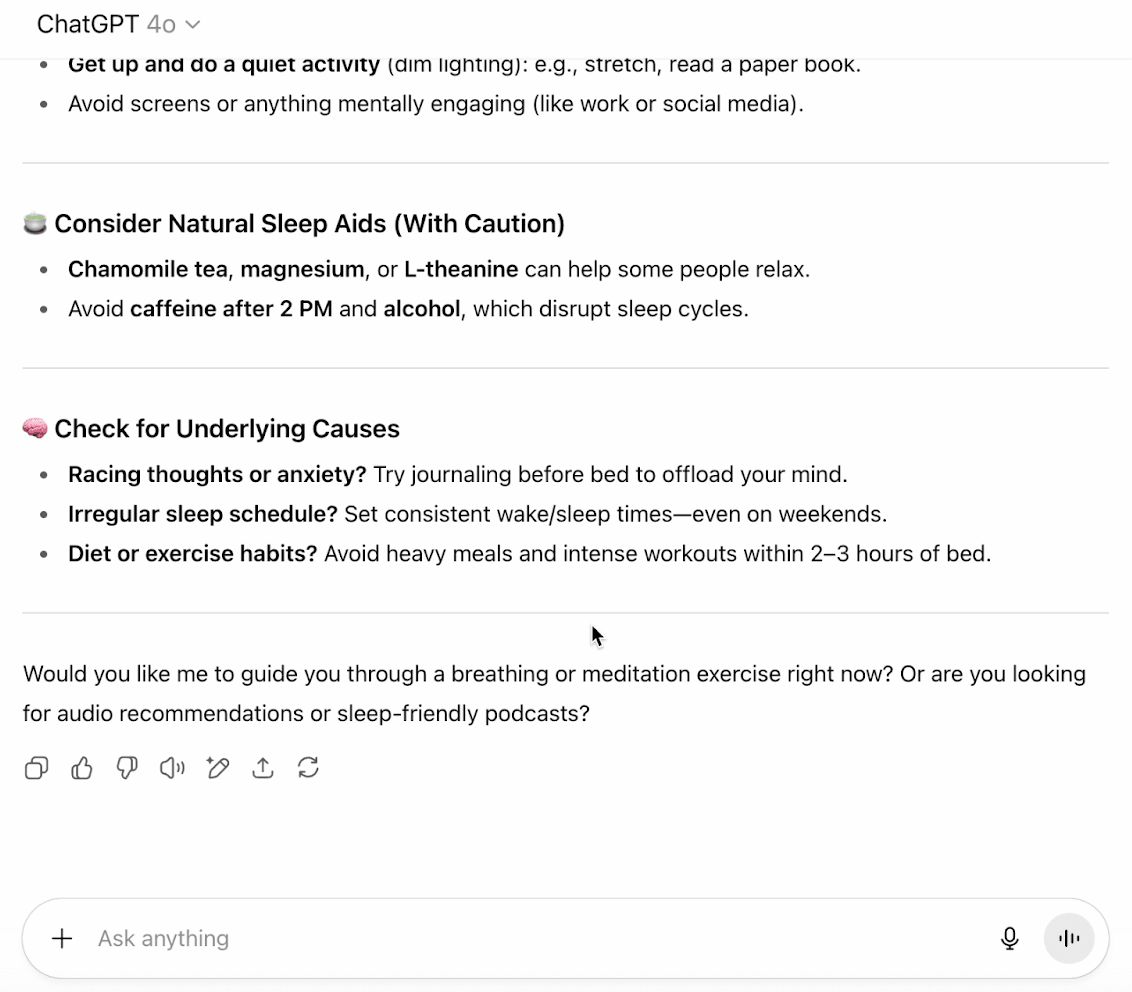

Time to dig deeper. What are some other possible causes? ChatGPT provides more in-depth psychological interventions.

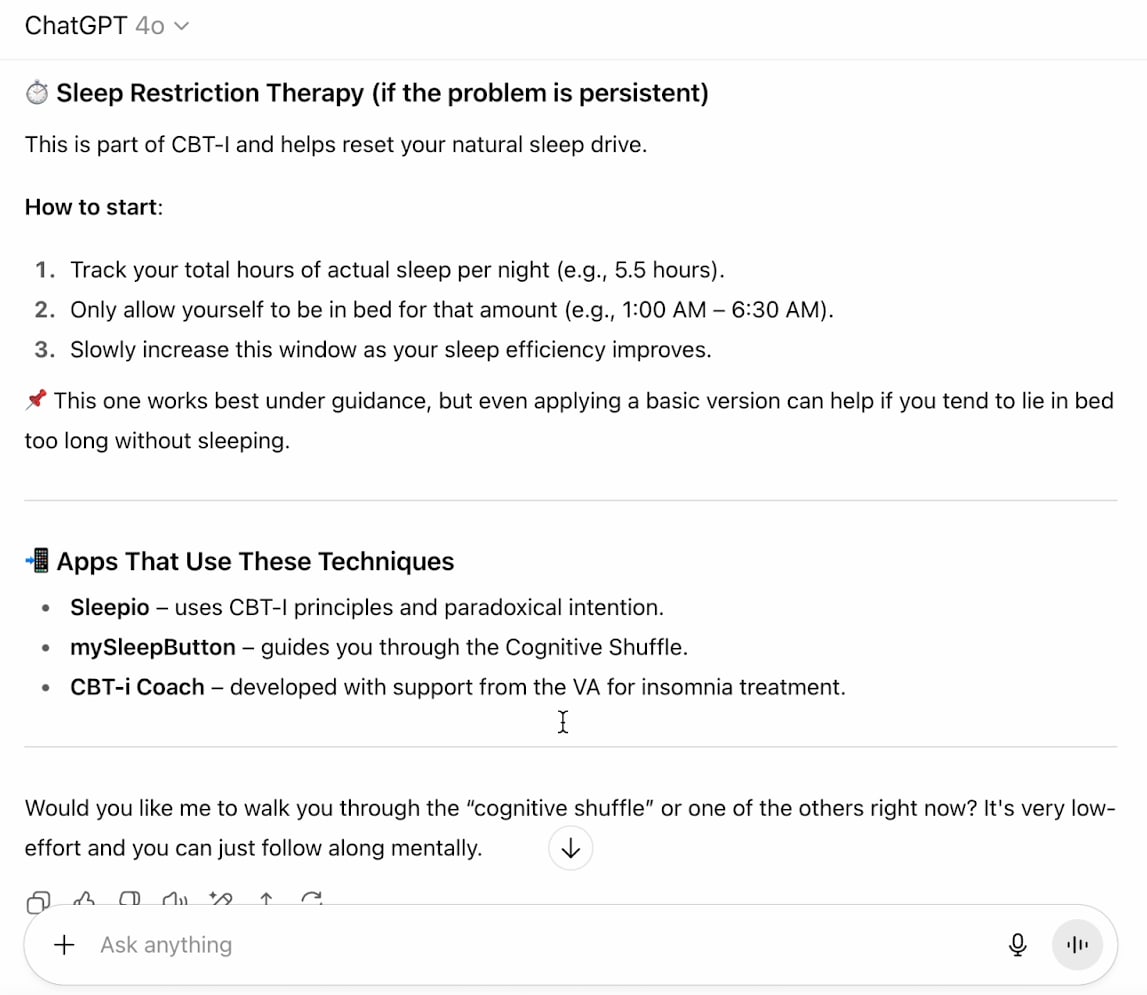

ChatGPT recommends specific smartphone apps it thinks could help, along with a few more possible causes.

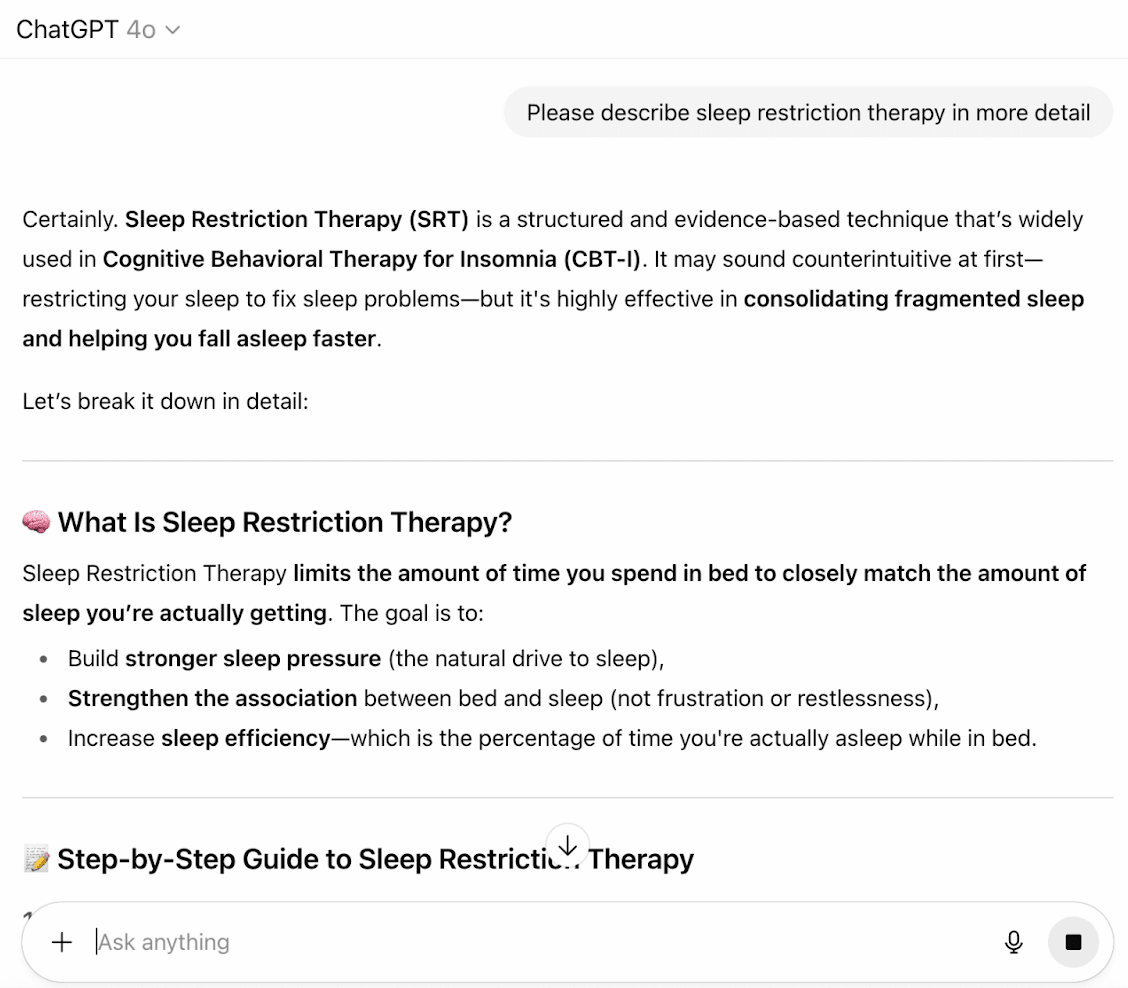

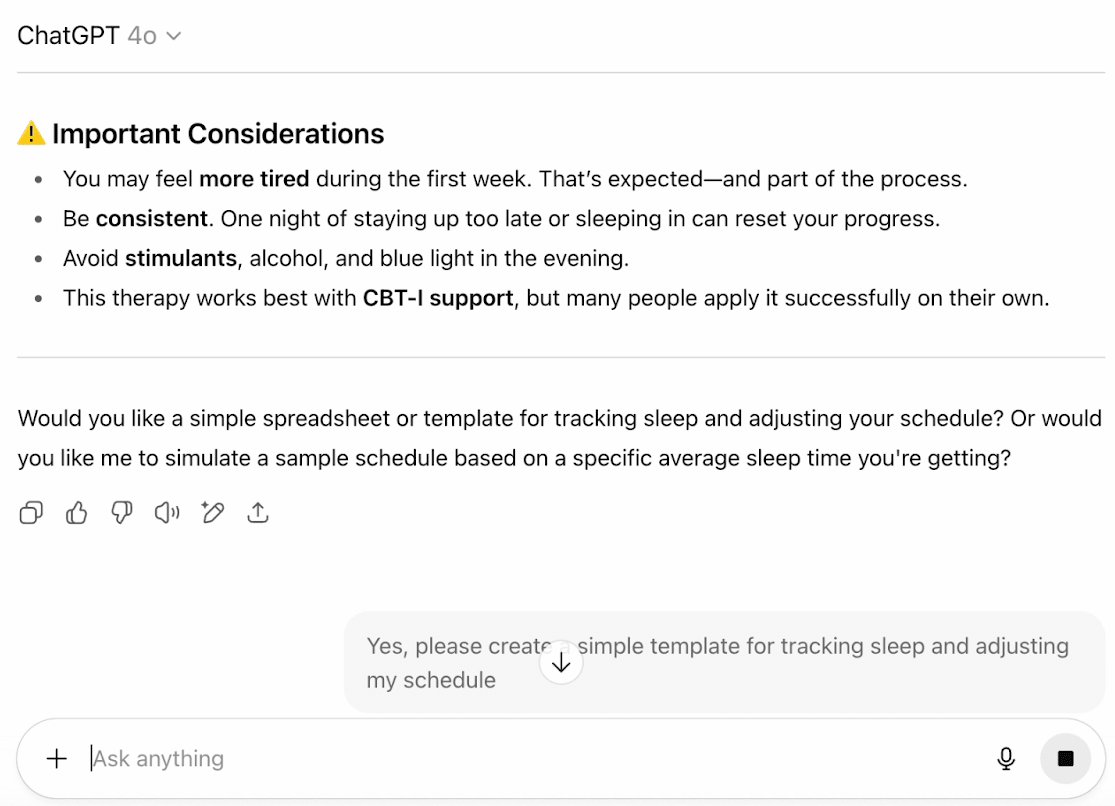

Could sleep restriction be the solution? It seems harsh but effective. ChatGPT brings forward lots of information on the technique, but I’ll still need to get this approved by my healthcare provider, even though the LLM suggests that it can be done on your own.

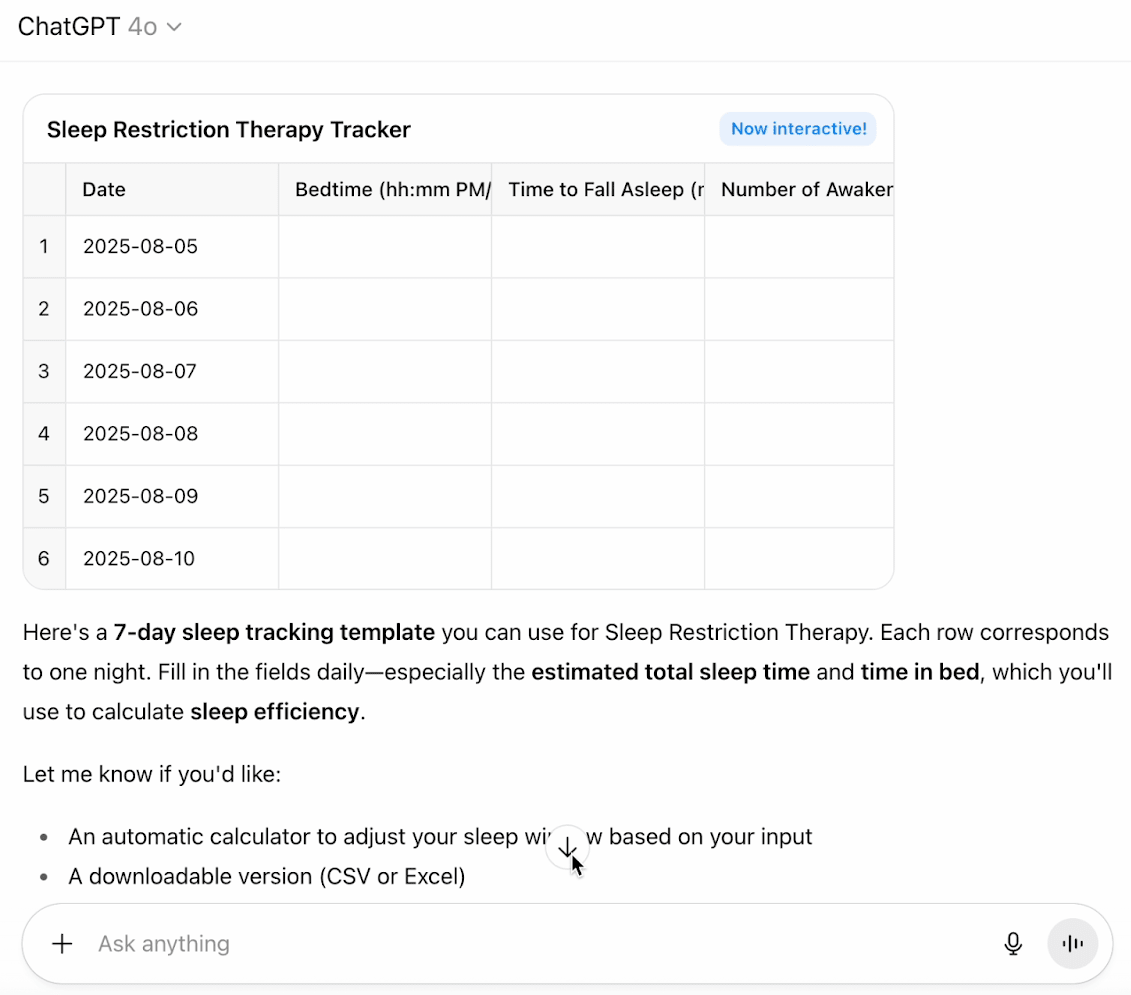

ChatGPT offers to create a tool to help track and adjust sleeping patterns.

An example of a sleep therapy tracker appears and is ready to use. This logged information can be shared with my therapist or another healthcare provider.

Working with ChatGPT is an iterative process, one that often takes several prompts to reach the desired outcome. While the responses in this case appeared to be of high quality, it is probably more effective to work with a human therapist with specialized training. Even so, the speed and availability of LLMs make them a powerful tool for mental health, especially for those already adept at working with AI.